I’ve been using the Postgres database engine for probably 10 years now, and despite having also used Oracle, DB2, Microsoft SQL Server, SQLite, MySQL, Access, FoxPro and others, it’s still by far and away my favourite.

In all my years of using it, I have never once encountered, or even heard of, an incident of data loss or integrity failure. It implements the SQL standard rigorously. And whatever I throw at it, it seems to just keep humming along.

And more than that, it’s extensible. Here are four extensions that take it from merely being the most solid, reliable relational database engine in existence, to also being an incredible modern application development platform. And they’re easy to install and get started with.

The first is uuid-ossp. Install it by simply typing in psql:

# CREATE EXTENSION "uuid-ossp";

Since discovering this, I’ve said ‘goodbye’ to SERIAL primary keys forever. Now, my table definitions frequently look like this:

    CREATE TABLE thing(
        uuid UUID PRIMARY KEY DEFAULT uuid_generate_v4(),
        ...
    );

And there you go: items of type ‘thing’ will now be uniquely identifiable GLOBALLY, not just within their own table. I can back up and restore the database without worrying about getting the serial generated correctly, I can merge data from two sources without worrying about conflicts, and I can use that UUID in other code, or in RESTful URLs, and always be sure what I’m referring to.
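To make the merge claim concrete, here is a minimal sketch. It assumes uuid-ossp is already installed; the tables `widget`, `widget_note` and `widget_import` are hypothetical names invented for this illustration, not part of any real schema.

```sql
-- Hypothetical tables, for illustration only.
CREATE TABLE widget (
    uuid UUID PRIMARY KEY DEFAULT uuid_generate_v4(),
    name text NOT NULL
);

-- Child rows reference the parent by its UUID, exactly as they would
-- reference a SERIAL key.
CREATE TABLE widget_note (
    uuid        UUID PRIMARY KEY DEFAULT uuid_generate_v4(),
    widget_uuid UUID NOT NULL REFERENCES widget (uuid),
    body        text NOT NULL
);

-- The key comes from the column DEFAULT; RETURNING hands it back to the caller.
INSERT INTO widget (name) VALUES ('sprocket') RETURNING uuid;

-- Assumed staging table holding rows exported from another database.
CREATE TABLE widget_import (
    uuid UUID PRIMARY KEY,
    name text NOT NULL
);

-- Merge the imported rows, keeping their original keys. Random v4 UUIDs
-- generated independently are extremely unlikely (though not strictly
-- guaranteed) to collide, so no renumbering step is needed.
INSERT INTO widget (uuid, name)
SELECT uuid, name FROM widget_import;
```

With SERIAL keys, the last statement would typically require remapping every imported id and every foreign key that points at it.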

Next is pgcrypto. Once again, it can be installed as follows:

# CREATE EXTENSION pgcrypto;

and then you can finally get your password management right, and free of all the embarrassing security errors that have plagued some popular sites in recent years.

I recommend the following structure for a username/password table:

    CREATE TABLE app_user(
        uuid UUID PRIMARY KEY DEFAULT uuid_generate_v4(), -- just as before!
        email text not null,
        hashed_password text not null,
        UNIQUE (email)
    );

And then populate the hashed_password column by using the handy crypt function from pgcrypto

INSERT INTO app_user (email, hashed_password) VALUES (:email, crypt(:password, gen_salt('bf'))) RETURNING uuid;

Then you can check user credentials like this:

SELECT EXISTS (SELECT uuid FROM app_user WHERE email=:email AND hashed_password=crypt(:password,hashed_password));

This has any number of security advantages, and avoids many common pitfalls:

- The algorithm has a tunable speed. By choosing a salt of type ‘bf’, the algorithm will be many thousands of times more resistant to brute-force attacks than one based on SHA1, and many thousands of times more resistant again than one based on MD5 hashing. See here for more in-depth info.

- By creating a new salt for each user, and embedding it in the output hash, the same username/password combination will not result in the same output hash. So even if a malicious attacker had access to this table, they wouldn’t be able to perform hash lookups in rainbow tables. It’s frightening that many sites don’t use this approach yet.

- It’s been very thoroughly tested. Although I’ve written plenty of crypto code before, I’d always rather use a widely-tested, discussed and understood implementation. There are so many mistakes that are easy to make and hard to detect in the security world that using a solid open-source library like pgcrypto is just good practice.
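The first two points can be seen directly in psql. This is a small sketch that assumes pgcrypto is installed; the password strings are made up, and the cost value of 10 is an arbitrary choice for illustration rather than a recommendation (the pgcrypto documentation lists the allowed range for ‘bf’ as 4 to 31, with a default of 6).

```sql
-- The optional second argument to gen_salt is the iteration count:
-- higher values make every hash, and therefore every guess, slower.
-- Each call to gen_salt returns a fresh random salt, so the two
-- hashes below differ even though the password is the same.
SELECT crypt('correct horse', gen_salt('bf', 10)) AS hash_a,
       crypt('correct horse', gen_salt('bf', 10)) AS hash_b;

-- Verification re-hashes the candidate using the salt and cost that are
-- embedded in the stored hash, so no separate salt column is needed.
WITH stored AS (
    SELECT crypt('correct horse', gen_salt('bf', 10)) AS hashed_password
)
SELECT hashed_password = crypt('correct horse', hashed_password) AS right_password,
       hashed_password = crypt('wrong guess',   hashed_password) AS wrong_password
FROM stored;
-- right_password is true, wrong_password is false
```

Raising the cost later only affects newly generated hashes; existing rows keep verifying because each stored hash carries its own parameters.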

Third is hstore, installed in the same way:

# CREATE EXTENSION hstore;

Hstore allows you to store arbitrary key/value pairs in a database column. This is perfect for storing property bags, and in situations where you don’t know at design time exactly what the structure of your data is going to be.

Let’s extend our user table:

    CREATE TABLE app_user(
        uuid UUID PRIMARY KEY DEFAULT uuid_generate_v4(), -- just as before!
        email text not null,
        hashed_password text not null,
        UNIQUE (email),
        properties hstore
    );
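If the app_user table from the previous section already exists, running CREATE TABLE again will fail. A minimal alternative, assuming that earlier table and the hstore extension are both in place, is to add the column to it:

```sql
-- Adds a nullable hstore column to the existing table; rows created
-- earlier will have properties set to NULL until they are updated.
ALTER TABLE app_user ADD COLUMN properties hstore;
```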

Now we can assign arbitrary properties to each user:

UPDATE app_user SET properties = 'twitter_id=>sir_tweetsalot, comments=>"some notes here", follow_up=>1'::hstore WHERE email = :email;

and then we can use that hstore field as follows:

SELECT * FROM app_user WHERE properties->'follow_up' = '1';
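A few more hstore operations are worth knowing. The sketch below assumes the app_user table with its properties column, and uses :email as a parameter placeholder in the same way as the earlier queries; the index name is made up.

```sql
-- Merge one key into the existing bag with ||. The coalesce covers
-- rows where properties is still NULL.
UPDATE app_user
SET properties = coalesce(properties, ''::hstore) || hstore('follow_up', '1')
WHERE email = :email;

-- ? tests whether a key is present at all, whatever its value.
SELECT email FROM app_user WHERE properties ? 'twitter_id';

-- delete() removes a single key and leaves the rest untouched.
UPDATE app_user
SET properties = delete(properties, 'comments')
WHERE email = :email;

-- A GIN index can serve the @>, ?, ?& and ?| operators, which helps
-- once the table grows.
CREATE INDEX app_user_properties_idx ON app_user USING gin (properties);

-- @> asks whether the column contains the given key/value pair.
SELECT email FROM app_user WHERE properties @> 'follow_up=>1';
```

Note that hstore values are always text, so anything numeric or boolean has to be cast on the way out.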

In fact, with UUIDs and hstore, Postgres is already looking like a pretty good NoSQL solution, but still with all the traditional SQL benefits of transactional integrity.

And then finally, plv8. I’m only beginning to discover how powerful this is, and it really deserves a post of its own. In brief, plv8 allows you to write stored procedures in JavaScript, CoffeeScript or LiveScript.
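As a small taste, here is a sketch of a JavaScript function callable from SQL. It assumes the plv8 extension is available on the server (it is packaged separately from Postgres itself); the function name `slugify` is a hypothetical example, not something plv8 provides.

```sql
CREATE EXTENSION IF NOT EXISTS plv8;

-- The function body is plain JavaScript. Named SQL arguments are
-- visible inside it as ordinary JavaScript variables.
CREATE OR REPLACE FUNCTION slugify(title text) RETURNS text AS $js$
    return title
        .toLowerCase()
        .replace(/[^a-z0-9]+/g, '-')   // collapse runs of other characters
        .replace(/^-+|-+$/g, '');      // trim leading and trailing dashes
$js$ LANGUAGE plv8 IMMUTABLE STRICT;

SELECT slugify('Hello, Postgres World!');
-- returns 'hello-postgres-world'
```

Because the function is declared IMMUTABLE, it could also be used in an expression index.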

There are all sorts of things that you could do with this. Suffice it to say, last month we were pretty proud of ourselves when we wrote our own dialect of Lisp, wrote a parser for it in CoffeeScript, and then got the whole thing running inside our Postgres database. Yeah, we were using Lisp on top of CoffeeScript to filter SQL records. That’s how we do things around here!

And all this is before Postgres formally gets JSON as a standard data type. I can’t wait!



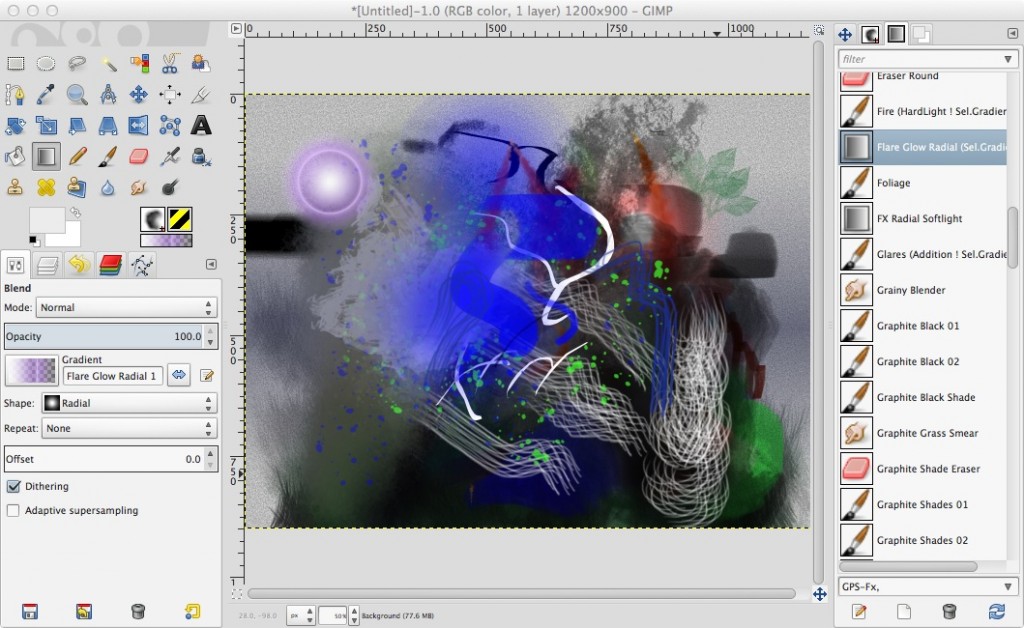

![css-cup_thumb[1]](/images/2013/07/css-cup_thumb1.jpg)